Hello readers! In this blog post, our Consultant, Sarthak, delves into the critical topic of Regex Fuzzing vulnerabilities, shedding light on their impact on application security. Regular expressions, while indispensable for tasks like input validation and pattern matching, can become a double-edged sword when improperly designed, leading to risks such as Server-Side Request Forgery (SSRF), Denial-of-Service (DoS) attacks, and open redirects.

This blog takes a comprehensive approach, exploring manual and automated fuzzing techniques, differential fuzzing, and tools like REcollapse, Burp Suite, Ffuf, and Atheris to identify and mitigate these vulnerabilities. We also cover exploitation techniques, real-world scenarios, and best practices such as strict validation, whitelisting over blacklisting, vetted patterns from trusted sources, and multi-layered security approaches to future-proof applications.

By the end, you’ll gain a solid understanding of regex vulnerabilities, how attackers exploit them, and effective mitigation strategies to enhance application security.

TL;DR:

- Regular expressions are powerful but can introduce vulnerabilities like SSRF, DoS, and open redirects if poorly designed.

- Regex fuzzing helps identify flaws in patterns, enabling attackers to bypass input validation and security controls.

- Common risks include catastrophic backtracking, improper anchoring, and permissive patterns that allow malicious inputs.

- Tools like REcollapse, Burp Suite, and Atheris are essential for fuzzing and testing regex for weaknesses.

- Best practices include strict validation, using whitelists over blacklists, relying on vetted patterns, and adopting multi-layered security approaches. Regular audits, performance testing, and defense-in-depth strategies help future-proof regex-based systems.

Introduction to Regex Fuzzing Vulnerabilities

Regular expressions (regex) are indispensable tools in modern programming, often used for input validation, search-and-replace functions, and pattern matching. Despite their ubiquity, regex patterns are a double-edged sword: they simplify development but often introduce vulnerabilities when poorly written.

Regex fuzzing is a technique to uncover flaws in these patterns, enabling attackers to bypass security controls. Whether it’s for user input validation, firewall rules, or malware detection, a weak regex can result in severe consequences, such as Server-Side Request Forgery (SSRF), open redirects, or even Denial-of-Service (DoS) attacks.

The Role of Regex in Security

Regular expressions are essential for enhancing security across various layers of an organization’s infrastructure. Below are some of their primary applications:

Common Security Use Cases for Regex

- Firewall Configuration:

Regex is often used to define rules that filter out malicious traffic by blocking specific file types, IP ranges, or user-agent strings.

- Validating User Input:

Regex helps ensure input data meets specific criteria, which helps prevent injection attacks such as SQL Injection and Cross-Site Scripting (XSS), as well as open redirects. For example, a regex might restrict usernames to alphanumeric characters only.

- Detecting Malware:

Regex patterns can identify malware signatures by scanning for specific strings or file formats that indicate malicious behavior. These patterns are used in Intrusion Detection Systems (IDS) and antivirus software.

How Faulty Regex Undermines Security

Poorly designed regex patterns can introduce vulnerabilities, especially if edge cases are not adequately accounted for. Attackers often exploit a single weak point to bypass security measures. Below are some common examples of how faulty regex patterns lead to vulnerabilities.

Examples of Vulnerabilities Caused by Faulty Regex

- Bypassing SSRF Protection:

- Scenario: A regex pattern is used to block internal IP addresses.

- Vulnerable Pattern: ^https?://(127\.|10\.|192\.168\.).*$

This pattern intends to block requests to loopback and private IP ranges. However, it lists only three prefixes and overlooks other addresses that can reach the local host, such as 0.0.0.0.

- Exploit: An attacker submits a URL like: https://0.0.0.0

The regex does not match this address, so the request passes through.

- Open Redirect Exploits:

- Scenario: An application uses regex to validate URLs pointing to examplesite.com with specific file extensions.

- Vulnerable Pattern: ^.*examplesite\.com\/.*(jpg|jpeg|png)$

The use of .* allows attackers to inject arbitrary domains.

- Exploit: https://attackersite.com?examplesite.com/abc.png

This input bypasses validation and redirects users to attackersite.com.

Best Practices for Safe Regex with Examples

- Adopt Strict Validation

- Whitelists Over Blacklists: Use a whitelist to define valid inputs explicitly. Blacklists often fail to block unexpected inputs.

- Example of Whitelist Validation for Email: ^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$

- Valid Input: user@example.com

- Invalid Input: user@malicious-site<script>.com

- Failure of a Blacklist: .*(@spam\.com|@fake\.org)$

- Bypass: An attacker uses user@spamz.com, which this blacklist does not block.

- Limit Input Length: Set length constraints to prevent excessively long inputs that can cause performance degradation.

- Example for Phone Numbers: ^\d{10}$

- Valid Input: 1234567890

- Invalid Input: 123456789012345 (too long)
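To make the whitelist and blacklist contrast concrete, here is a minimal Python sketch that runs the two email patterns from above against the same inputs. The helper names and the 254-character length cap are assumptions made for illustration, not part of any particular framework.

```python
import re

# Whitelist: describes exactly what a valid address looks like (pattern from the text).
ALLOW_EMAIL = re.compile(r"[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}")
# Blacklist: tries to enumerate known-bad domains (pattern from the text).
BLOCK_EMAIL = re.compile(r".*(@spam\.com|@fake\.org)$")
MAX_EMAIL_LENGTH = 254  # assumed cap, chosen only for this example


def whitelist_accepts(value: str) -> bool:
    # Check the length first so the regex never sees oversized input.
    return len(value) <= MAX_EMAIL_LENGTH and ALLOW_EMAIL.fullmatch(value) is not None


def blacklist_accepts(value: str) -> bool:
    # Anything the blacklist does not recognise is let through.
    return BLOCK_EMAIL.match(value) is None


if __name__ == "__main__":
    for sample in ("user@example.com", "user@spamz.com", "user@malicious-site<script>.com"):
        print(repr(sample), whitelist_accepts(sample), blacklist_accepts(sample))
```

The blacklist lets through both the near-miss domain and the address containing markup, because neither appears on its list. The whitelist rejects the markup because those characters were never allowed in the first place.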


- Avoid Publishing Patterns:

Do not expose regex patterns in public repositories, client-side scripts, or error messages. Attackers can use these patterns to craft bypass payloads.

- Risk Example: If the regex ^[a-zA-Z0-9_]{3,15}$ is publicly known, an attacker may exploit its lack of special character checks.

- Solution: Keep patterns server-side, and complement with backend checks.

- Use Established Patterns:

Rely on trusted, community-tested patterns from reputable sources like the OWASP Validation Regex Repository.

- Example from OWASP for Strong Password Validation:

^(?=.*[a-z])(?=.*[A-Z])(?=.*\d)(?=.*[@$!%*?&])[A-Za-z\d@$!%*?&]{8,}$

- Valid Input: Strong@123

- Invalid Input: weakpassword

- Why Established Patterns?

These patterns are vetted against common edge cases and vulnerabilities, reducing the likelihood of bypasses.

- Implement Defense-in-Depth:

Combine regex with other mechanisms for a multi-layered approach.

- Parameterized Queries: Prevent SQL Injection when validating user input in database queries.

- Bad Example (Vulnerable): "SELECT * FROM users WHERE username = '" + userInput + "';"

- Good Example (with regex): ^[a-zA-Z0-9_]{3,15}$

Used with: SELECT * FROM users WHERE username = ?;

- Content Security Policies (CSPs): Apply regex to validate script sources in a CSP.

- Example Regex for Trusted Domains: ^https://(trusted\.site|cdn\.example)\.com/.*$

- Conduct Fuzz Testing: Test regex patterns by submitting edge cases and malicious inputs to uncover vulnerabilities.

- Example of Fuzz Inputs for Regex: ^.*example\.com.*$

- Fuzzed Inputs:

- https://attacker.com?example.com → Passes validation

- %0Aexample.com → Passes validation through newline injection when ^ and $ act as line anchors (multiline mode)

- Fuzz Testing Tools:

- Use REcollapse to generate payloads: recollapse -p 2,3 -e 1 https://example.com

- Test payloads with Burp Suite Intruder.

- Improved Pattern: ^https://example\.com/.*$

- Prevents bypasses from newline injection or subdomain tricks.
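As a rough illustration of this kind of check, the sketch below runs a small, hand-written payload list against the loose and improved patterns shown above. The payload list and the `run_corpus` helper are hypothetical; in practice the list would come from a generator such as REcollapse.

```python
import re

LOOSE = re.compile(r"^.*example\.com.*$")          # permissive pattern from the text
STRICT = re.compile(r"^https://example\.com/.*$")  # improved pattern from the text

# Hypothetical payload list for illustration.
PAYLOADS = [
    "https://example.com/home",
    "https://attacker.com?example.com",
    "https://example.com.attacker.com/",
    "http://example.com/",
]


def run_corpus(pattern, payloads):
    """Return the payloads that the pattern accepts."""
    return [p for p in payloads if pattern.search(p)]


if __name__ == "__main__":
    print("loose accepts: ", run_corpus(LOOSE, PAYLOADS))
    print("strict accepts:", run_corpus(STRICT, PAYLOADS))
```

Keeping the payload list next to the pattern makes it easy to rerun the same cases whenever the regex changes.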

Core Concepts of Regex Vulnerabilities

- Regex Vulnerabilities:

Mistakes in pattern creation can expose systems to bypasses, leading to issues like SSRF (Server-Side Request Forgery), open redirects, and more.

Examples include catastrophic backtracking, improper boundaries, and excessive resource consumption.

- Fuzzing:

A technique that generates inputs to test the behavior of regex patterns. Fuzzing helps identify vulnerabilities like bypasses, performance issues, or incorrect matches.

Tools like Atheris, Burp Suite, REcollapse, and SDL Regex Fuzzer are pivotal in automating regex testing.

- Bypass:

Exploiting logical flaws in regex patterns to bypass validation or execute unintended operations.

Common methods include case manipulation, special character injection, newline-based bypass, and exploiting backtracking.

Common Regex Vulnerabilities and Exploits

- Catastrophic Backtracking (ReDoS)

Catastrophic backtracking occurs when a backtracking regex engine has to try an excessive number of combinations because of nested quantifiers.

- Example: Vulnerable Regex: (a+)+ used where the whole input must match, for example ^(a+)+$

- Exploit: A long string of a characters followed by a character that doesn’t match, such as aaaaaaaaaaaaaaaa!

- Attack: Before it can report a failed match, the engine tries every possible way of splitting the run of a characters between the inner and outer quantifiers, so processing time grows exponentially with input length and can cause denial of service.

- Impact: If such a regex is used in a high-traffic application, a crafted input could bring the server to a halt.

- Solution: Where the engine supports them, use atomic groups: (?>a+)+

or possessive quantifiers: (a++)+

Otherwise, rewrite the pattern without nested quantifiers (here, ^a+$ accepts the same strings).
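The growth is easy to observe with a small timing sketch. The one below uses Python's built-in re module, which is a backtracking engine, and compares the nested pattern with a rewritten pattern that accepts the same strings. The helper name and input sizes are arbitrary choices for illustration; absolute timings will vary by machine.

```python
import re
import time

VULNERABLE = re.compile(r"^(a+)+$")  # nested quantifiers
REWRITTEN = re.compile(r"^a+$")      # accepts the same strings without nesting


def time_match(pattern, text):
    """Return (matched, seconds) for a single match attempt."""
    start = time.perf_counter()
    matched = pattern.match(text) is not None
    return matched, time.perf_counter() - start


if __name__ == "__main__":
    # Each extra "a" roughly doubles the work for the nested pattern.
    for n in (10, 14, 18, 20):
        payload = "a" * n + "!"
        _, slow = time_match(VULNERABLE, payload)
        _, fast = time_match(REWRITTEN, payload)
        print(f"n={n:2d}  nested={slow:.4f}s  rewritten={fast:.6f}s")
```

Input sizes are kept small on purpose. Adding only a few more characters can make the nested pattern run for minutes.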

- Improper Anchoring

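Using ^ and $ without considering their limitations can lead to bypasses. In multiline mode (and by default in some engines, such as Ruby's) these symbols match the beginning and end of each line, not of the whole input string, and in many engines $ also matches just before a trailing newline.

- Example: Vulnerable Regex: (^a|a$)

- Bypass: %20a%20 (a value padded with spaces no longer starts or ends with a, so a check built on this pattern misses it if the application trims whitespace afterwards)

- Solution: Use \A (start of string) and \z (end of string; \Z in Python) for stricter anchoring.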
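The difference between line anchors and string anchors can be checked directly in Python. In this sketch, the lowercase-only username rule and the `accepts` helper are assumptions made for the example.

```python
import re

LINE_ANCHORED = r"^[a-z]+$"
STRING_ANCHORED = r"\A[a-z]+\Z"  # in Python, \Z matches only at the very end


def accepts(pattern: str, value: str, flags: int = 0) -> bool:
    return re.search(pattern, value, flags) is not None


trailing_newline = "admin\n"      # e.g. admin%0A after URL decoding
second_line = "<script>\nadmin"   # hostile first line, valid-looking second line

print(accepts(LINE_ANCHORED, trailing_newline))           # True: $ matches before the final newline
print(accepts(STRING_ANCHORED, trailing_newline))         # False
print(accepts(LINE_ANCHORED, second_line, re.MULTILINE))  # True: ^ matches after the newline
print(accepts(STRING_ANCHORED, second_line))              # False
```

In Python, `re.fullmatch` is another way to require that the entire input matches.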

- Case-Sensitivity Issues

Regex patterns without case-insensitive modifiers ((?i:) or /i) allow bypass via case changes.

- Example: Vulnerable Regex: http

- Bypass: hTtP

- Solution: Add the case-insensitivity modifier or normalize inputs before validation.

- Improper Use of Dot (.)

The dot operator matches any character except newlines. If newline handling isn’t explicitly considered, bypasses are possible.

- Example: Vulnerable Regex: a.*b

- Bypass: a%0Ab

- Solution: Use . carefully, or enable dot-all mode with (?s) so that . also matches newlines.
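A short Python check shows the effect, treating a.*b as a detection pattern that is supposed to flag the input. The variable names are illustrative only.

```python
import re
from urllib.parse import unquote

DEFAULT_DOT = re.compile(r"a.*b")            # . stops at newlines
DOTALL_DOT = re.compile(r"a.*b", re.DOTALL)  # equivalent to the inline (?s) flag
EXPLICIT = re.compile(r"a[\s\S]*b")          # spells out "any character" explicitly

payload = unquote("a%0Ab")  # "a", newline, "b"

print(DEFAULT_DOT.search(payload) is not None)  # False: the newline hides the payload
print(DOTALL_DOT.search(payload) is not None)   # True
print(EXPLICIT.search(payload) is not None)     # True
```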

- Overuse of Greedy Quantifiers

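Greedy quantifiers (*, +, {n,m}) consume as much input as possible, which can lead to incorrect matches. A bounded quantifier also limits only the matched portion, not the whole input, unless the pattern is anchored.

- Example: Vulnerable Regex: a{1,5}

- Bypass: aaaaaa (the unanchored pattern still finds five a characters inside the longer input)

- Solution: Anchor the pattern (^a{1,5}$) when the bound must apply to the entire input, and use lazy quantifiers where applicable (*?, +?, {n,m}?) to control how much each quantifier consumes.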
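The sketch below shows what the bounded quantifier actually matches in Python. It is meant only to illustrate the difference between searching inside the input and matching the whole input.

```python
import re

BOUNDED = re.compile(r"a{1,5}")
value = "aaaaaa"  # six characters, one more than the intended maximum

print(BOUNDED.search(value).group())         # "aaaaa": greedy, and the rest is ignored
print(re.search(r"a{1,5}?", value).group())  # "a": lazy, but the input still "matches"
print(BOUNDED.fullmatch(value))              # None: whole-input matching enforces the bound
```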

- Insufficient Input Scope

A regex is applied only to query parameters for input validation but ignores other sources of input like headers or cookies.

- Example: Vulnerable Regex: ^[a-zA-Z0-9_]+$

Used to validate a username query parameter.

- Exploit: An attacker provides malicious input via a cookie or a custom header:

- Cookie: username=<script>alert('xss')</script>

Since the regex is applied only to the query parameter, this malicious input bypasses validation.

- Solution: Apply regex validation to all relevant input sources, such as:

- Query parameters

- Cookies

- Headers

- Request body

- Improved Validation: ^[a-zA-Z0-9_]{3,20}$

Apply to all inputs in server-side middleware or request handling logic.

- Example in Node.js:

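    const USERNAME_RE = /^[a-zA-Z0-9_]{3,20}$/;

    // Reject anything that is not a string matching the whitelist pattern.
    // The type check matters: RegExp.test() coerces undefined to "undefined",
    // which would otherwise pass this pattern.
    function validateInput(input) {
        if (typeof input !== 'string' || !USERNAME_RE.test(input)) {
            throw new Error('Invalid input');
        }
    }

    // Assumes an Express app with cookie-parser registered, so req.cookies is populated.
    app.use((req, res, next) => {
        const candidates = [
            req.query.username,
            req.cookies && req.cookies.username,
            req.headers['x-username'],
        ];
        try {
            // Validate every source that is present on this request.
            candidates.filter((value) => value !== undefined).forEach(validateInput);
            next();
        } catch (err) {
            res.status(400).send(err.message);
        }
    });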


- Improper Escaping and Logic Errors

- Example: Vulnerable Regex: ^a|b$

This pattern is intended to match only the strings a or b. Because alternation has the lowest precedence, it actually matches:

Strings starting with a

Strings ending with b

- Exploit: The input ab will match because the regex fails to group conditions properly. So will longer inputs such as a<script>.

- Solution: Fix the regex by grouping conditions explicitly:

^(a|b)$

This pattern correctly matches only a or b.
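The precedence issue can be verified with a few sample inputs. The sample list and helper below are illustrative.

```python
import re

UNGROUPED = re.compile(r"^a|b$")   # parsed as (^a) OR (b$)
GROUPED = re.compile(r"^(a|b)$")   # anchors apply to both alternatives

SAMPLES = ["a", "b", "ab", "a<script>", "xb", "c"]


def accepted(pattern, samples):
    """Return the samples that the pattern accepts."""
    return [s for s in samples if pattern.search(s)]


print(accepted(UNGROUPED, SAMPLES))  # ['a', 'b', 'ab', 'a<script>', 'xb']
print(accepted(GROUPED, SAMPLES))    # ['a', 'b']
```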


Fuzzing

Fuzzing is the process of testing regex patterns by generating inputs to uncover vulnerabilities.

Purpose of Fuzzing

- Discover Bypasses: Identify inputs that should fail validation but pass.

- Detect Performance Issues: Identify patterns prone to catastrophic backtracking.

- Validate Logic: Ensure regex behaves as expected across all scenarios.

Techniques

- Manual Fuzzing

- Developers can manually craft edge cases to test regex patterns for vulnerabilities.

- Example: Test for inputs like a%0Ab (newline injection) or \t (tab character).

- Tools: Regex101, Regexpal.

- Approach:

- Test common bypass inputs: empty strings, long strings, newline injections.

- Debug using a regex debugger to view the matching process.

- Manual Ways to Test Regex Vulnerabilities

Manual fuzzing is a developer-driven process to craft and test specific inputs against regex patterns. This approach is essential for understanding how a pattern behaves with edge cases and identifying potential vulnerabilities.

| Category | Vulnerable Regex | Test Input | Problem | Solution |
| --- | --- | --- | --- | --- |
| Performance Testing | (a+)+ | "aaaaa!" | Catastrophic backtracking | (?>a+)+ |
| Input Scope Coverage | ^[a-zA-Z0-9_]{3,20}$ | Header: invalid! | Missing validation for headers | Validate all inputs consistently |
| Logical Accuracy | `(^a\|a$)` | "%20a%20" | Improper anchoring matches lines | Use \A and \Z for stricter anchoring |
| Edge Case Handling | a.*b | "a%0Ab" | Matches newline injections | a[^\n]*b |

- Automated Fuzzing

Automated tools generate large datasets to test regex patterns systematically.

- Key Tools:

- Atheris: A Python-based fuzzer that uses coverage-guided techniques.

- SDL Regex Fuzzer: Detects catastrophic backtracking patterns in .NET regexes.

- Ffuf: A fuzzing tool that can test regexes against web applications.

- REcollapse: Designed for regex vulnerability discovery.

These tools simulate potential attacks and highlight regex flaws.
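The idea behind automated fuzzing can be sketched with the standard library alone. The example below is a deliberately simple random fuzzer, not a coverage-guided one like Atheris. It generates short strings from a small hostile alphabet and reports inputs where a regex validator disagrees with a plain-Python statement of the intended rule. The validator, oracle, alphabet, and seed are all assumptions for illustration.

```python
import random
import re

USERNAME = re.compile(r"^[a-zA-Z0-9_]{3,20}$")
ALLOWED = set("abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789_")
ALPHABET = "ab1_ \n\t!<"  # small alphabet mixing valid and hostile characters


def validator(value: str) -> bool:
    """Validation as an application might implement it."""
    return USERNAME.match(value) is not None


def oracle(value: str) -> bool:
    """Independent statement of the intended rule, written without regex."""
    return 3 <= len(value) <= 20 and all(ch in ALLOWED for ch in value)


def fuzz(iterations: int = 20000, seed: int = 1234) -> set:
    """Return every generated input on which validator and oracle disagree."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    findings = set()
    for _ in range(iterations):
        length = rng.randint(0, 8)
        candidate = "".join(rng.choice(ALPHABET) for _ in range(length))
        if validator(candidate) != oracle(candidate):
            findings.add(candidate)
    return findings


if __name__ == "__main__":
    for finding in sorted(fuzz())[:5]:
        print(repr(finding))
```

Every disagreement this harness finds is a valid-looking username followed by a newline, because `$` in Python also matches just before a trailing newline.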


- Differential Fuzzing

Involves comparing the outputs of two implementations (e.g., the application's regex vs. a standard parser) for discrepancies.

- Example: Each fuzz input is processed by the application regex and by Python’s urllib to detect mismatches. The application's regex result can likewise be compared with Python’s re module to identify inconsistencies or potential vulnerabilities.
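A minimal sketch of this comparison might look like the following. The "application" regex here is a hypothetical stand-in for whatever pattern is under test, and the candidate URLs are hand-picked rather than generated.

```python
import re
from urllib.parse import urlsplit

# Hypothetical application check: "the URL mentions example.com".
APP_REGEX = re.compile(r"^https?://.*example\.com")


def regex_allows(url: str) -> bool:
    return APP_REGEX.search(url) is not None


def parser_allows(url: str) -> bool:
    # Reference implementation: trust only the host that urllib extracts.
    host = urlsplit(url).hostname or ""
    return host == "example.com" or host.endswith(".example.com")


CANDIDATES = [
    "https://example.com/",
    "https://cdn.example.com/a.png",
    "https://attacker.com/?next=example.com",
    "https://example.com.attacker.com/",
    "https://example.com@attacker.com/",
    "https://attacker.com/#example.com",
]


def disagreements(urls):
    """Return URLs on which the regex and the parser give different verdicts."""
    return [u for u in urls if regex_allows(u) != parser_allows(u)]


if __name__ == "__main__":
    for url in disagreements(CANDIDATES):
        print("mismatch:", url)
```

Each mismatch is a candidate bypass: the regex accepts the URL, while the parser shows that the request would go to a different host.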

- Performance Testing

Regexes are tested for computational efficiency. Regexes with exponential time complexity, even without a security impact, can degrade performance under load.

Key Fuzzing Tools

- Atheris: A Python-based coverage-guided fuzzing engine.

- SDL Regex Fuzzer: Specializes in detecting catastrophic backtracking.

- REcollapse: Helps identify logical flaws and bypasses.

- Burp Suite: Custom regex payloads for input testing in web applications.

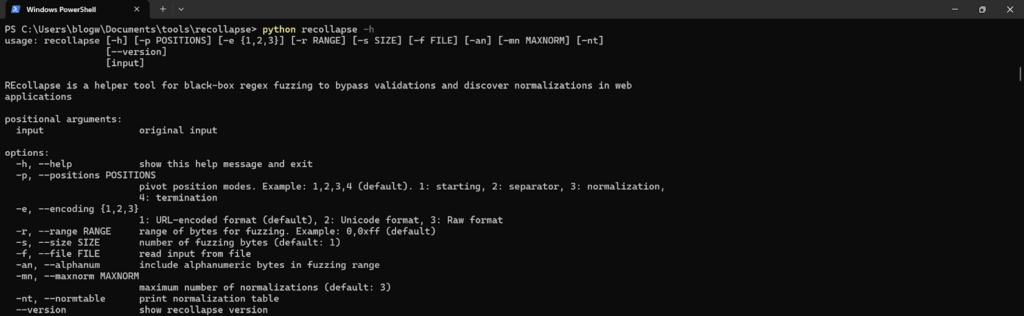

What is REcollapse?

REcollapse is a helper tool for regex fuzzing that generates payloads to test how web applications handle input validation, sanitization, and normalization. It helps penetration testers:

- Bypass Web Application Firewalls (WAFs).

- Identify vulnerabilities caused by weak regex implementations.

- Uncover normalization inconsistencies across application endpoints.

Note: REcollapse focuses on payload generation. Use tools like Burp Suite Intruder, Ffuf, or Postman to send and analyze these payloads effectively.

Why REcollapse?

Modern applications rely heavily on regex validation to sanitize inputs. However:

- Regexes are reused: Developers copy patterns from online resources without testing edge cases.

- Regex behavior differs: Regex libraries in Python, JavaScript, and Ruby handle patterns differently.

- Normalization issues: Applications normalize input inconsistently, leading to potential exploitation.

Installation and Setup of REcollapse

- Requirements

- Python 3.x

- Docker (optional, for isolated testing environments)

- Installation

- Clone the repository:

git clone https://github.com/0xacb/recollapse.git

cd recollapse

- Install dependencies:

pip3 install --user --upgrade -r requirements.txt

- Docker setup:

docker build -t recollapse .

- Verify installation:

recollapse -h

Understanding Key Concepts of REcollapse

Regex Pivot Positions

REcollapse targets specific positions within input strings to maximize bypass potential:

- Start Position (1): The beginning of the input.

- Separator Positions (2): Around special characters like . or /.

- Normalization Positions (3): Bytes affected by normalization (e.g., ª → a).

- Termination Position (4): The end of the input.

Example:

Input: this_is.an_example

- Start fuzzing: $this_is.an_example

- Separator fuzzing: this$_$is$.$an$_$example

- End fuzzing: this_is.an_example$
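To show where these positions fall, here is a simplified Python sketch that places a marker at the start, separator, and end positions of an input. It is not REcollapse's implementation. The function name is hypothetical, separators are assumed to be any non-alphanumeric character, and the normalization position is left out.

```python
import re


def pivot_payloads(value: str, marker: str = "$") -> dict:
    """Show where a fuzz marker lands for the start, separator and end positions."""
    return {
        "start": marker + value,
        # Wrap every non-alphanumeric character with the marker on both sides.
        "separators": re.sub(
            r"[^a-zA-Z0-9]", lambda m: marker + m.group(0) + marker, value
        ),
        "end": value + marker,
    }


if __name__ == "__main__":
    for position, payload in pivot_payloads("this_is.an_example").items():
        print(f"{position:10s} {payload}")
```

In a real run, each marker position would be filled with candidate bytes rather than a literal `$`.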

Encoding Formats

- URL Encoding: %22this_is.an_example

Use for application/x-www-form-urlencoded or query parameters.

- Unicode Encoding: \u0022this_is.an_example

Use for application/json.

- Raw Format: "this_is.an_example

Use for multipart/form-data.
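The three formats are different spellings of the same character. As a rough sketch, the hypothetical helper below renders one character in each format using the standard library. The `\u` form assumes a character in the Basic Multilingual Plane.

```python
from urllib.parse import quote


def encode_char(char: str) -> dict:
    """Render a single character in URL-encoded, JSON-escaped and raw form."""
    return {
        "url": quote(char, safe=""),       # percent-encoded UTF-8 bytes
        "unicode": "\\u%04x" % ord(char),  # JSON-style escape (BMP characters only)
        "raw": char,
    }


if __name__ == "__main__":
    for name, prefix in encode_char('"').items():
        print(f"{name:8s} {prefix}this_is.an_example")
```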

Case Studies

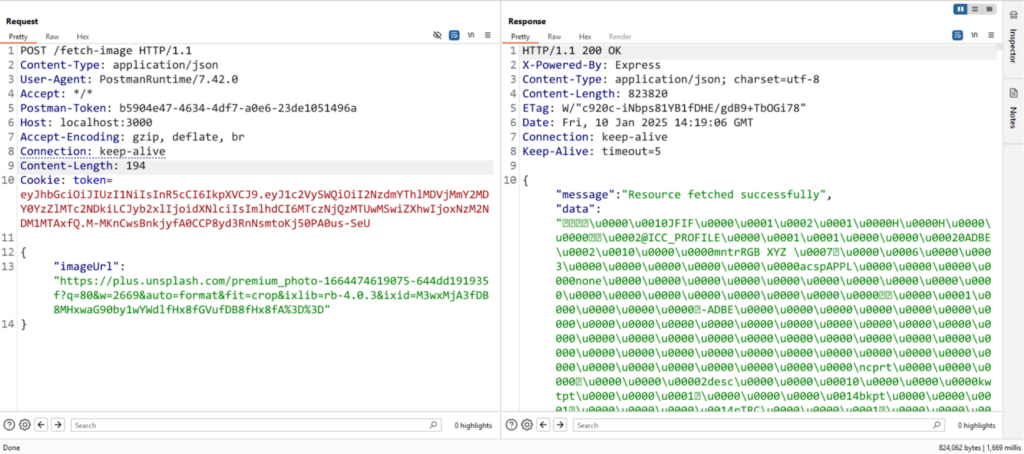

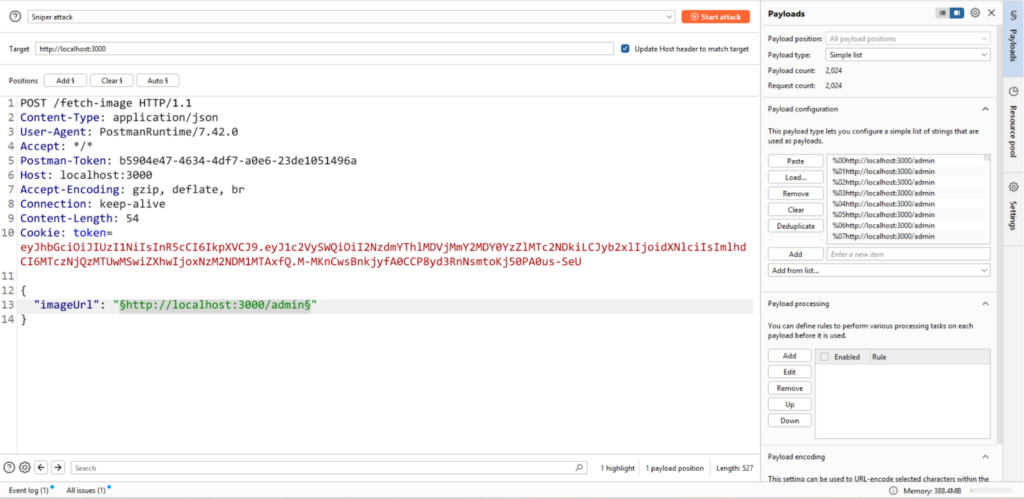

Case Study 1: Bypassing Localhost Restriction

Scenario: A /fetch-image endpoint is designed to fetch images from a URL provided by the user. To prevent abuse, the application blocks requests targeting sensitive paths like http://localhost:3000/admin.

The application fetches an image when a URL is provided:

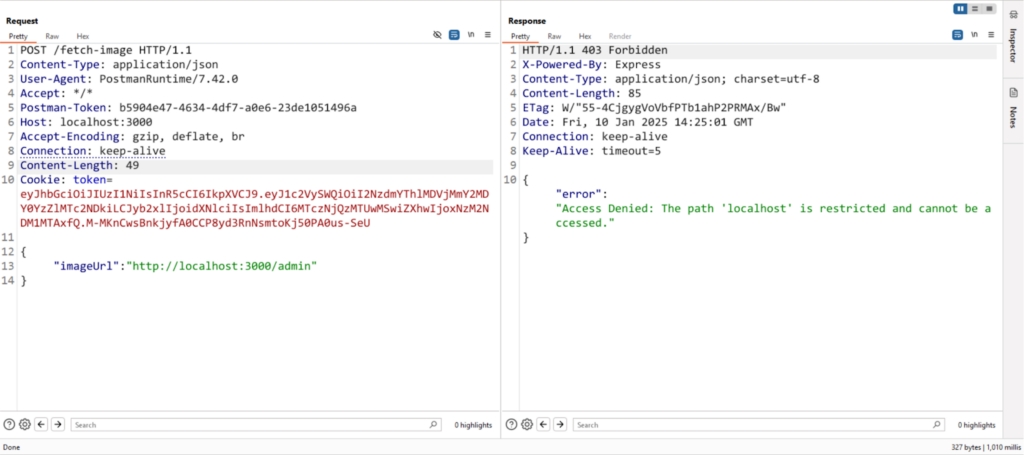

A direct POST request with a JSON body such as:

{ "imageUrl": "http://localhost:3000/admin" }

returns an error:

Access Denied: The path 'localhost' is restricted and cannot be accessed.

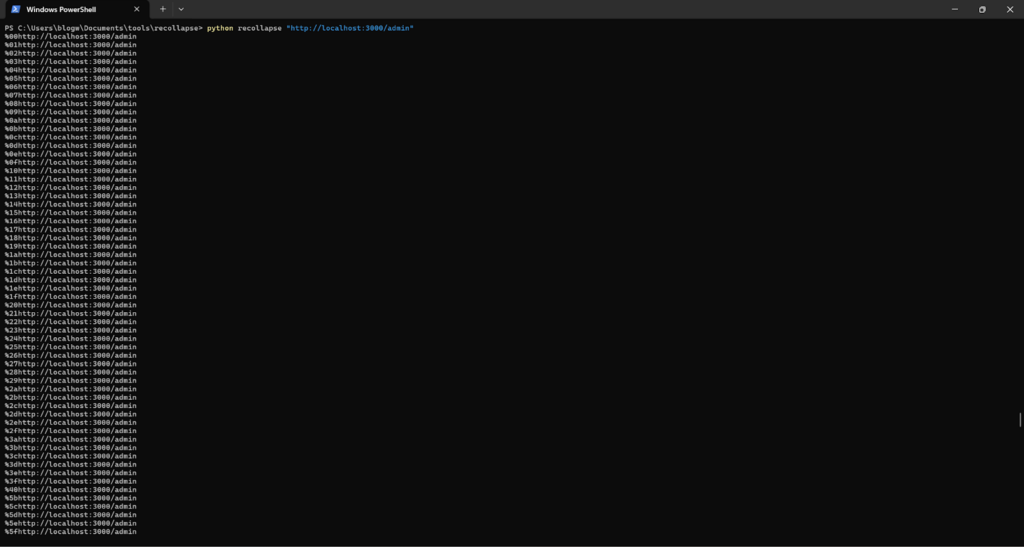

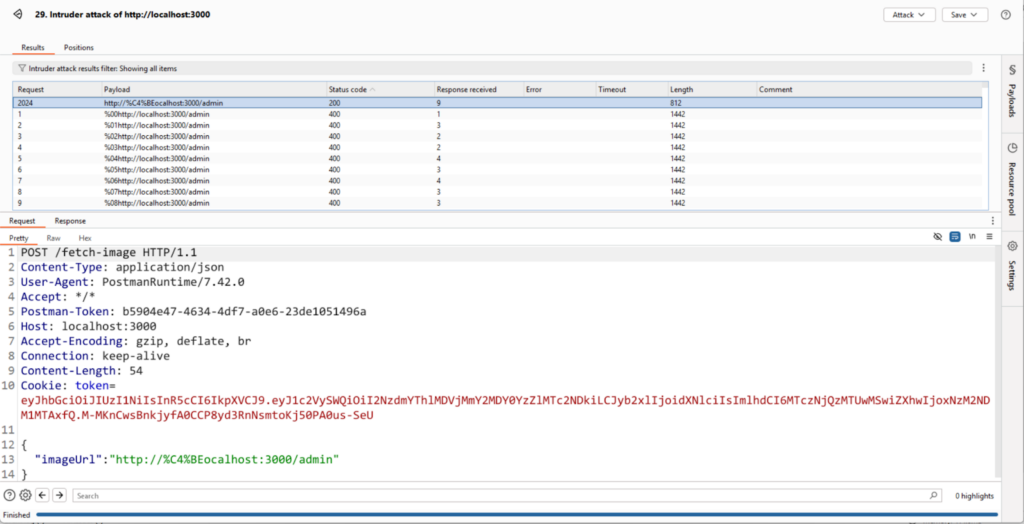

Exploitation: Using REcollapse, we generate payloads that manipulate the localhost string with encoding and normalization tricks. These payloads are tested using tools like Burp Suite Intruder.

Then use the intruder and provide the list of payloads.

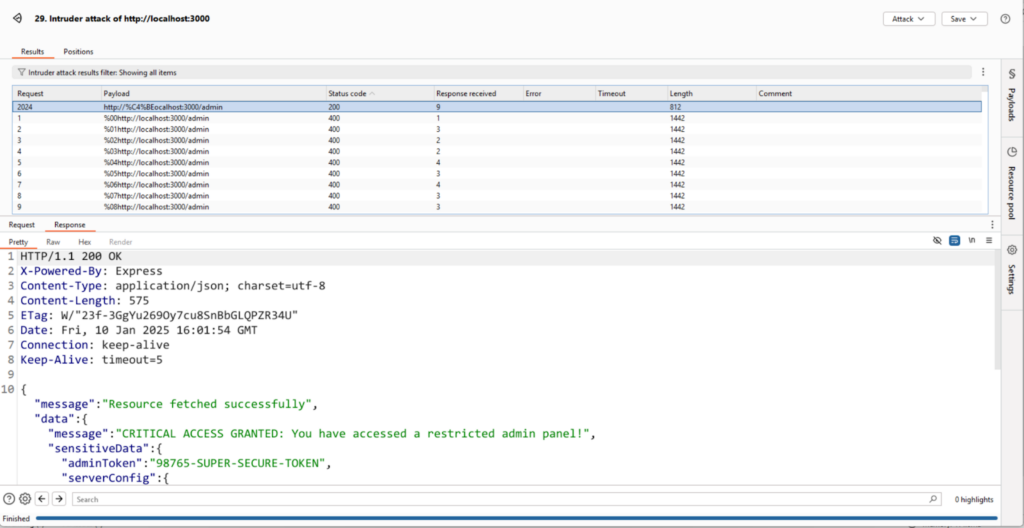

We found that there is one payload that is giving ‘200 OK’ and bypassed the regex through REcollapse.

http://%C4%BEocalhost:3000/admin

Result: This payload bypasses the regex validation because the application strictly checks for the literal localhost string and fails to account for encoded variants.

Impact: The attacker gains unauthorized access to restricted paths, potentially exposing sensitive data or functionality.

Mitigation:

- Normalize input before validation to decode encoded characters.

- Use stricter regex patterns to match all possible representations of restricted values.

- Implement defense-in-depth with additional security checks.
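The first mitigation can be sketched in Python as follows. This assumes the application's later normalization behaves like percent-decoding followed by Unicode NFKD decomposition with combining marks removed. A real fix should mirror whatever normalization the application actually performs, and the function names are hypothetical.

```python
import re
import unicodedata
from urllib.parse import unquote

BLOCKED_HOST = re.compile(r"localhost", re.IGNORECASE)


def canonicalize(value: str) -> str:
    """Decode and normalize the input the way the backend is assumed to."""
    decoded = unquote(value)                            # %C4%BE -> ľ
    decomposed = unicodedata.normalize("NFKD", decoded)  # ľ -> l + combining caron
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return stripped.lower()


def is_blocked(url: str) -> bool:
    # Validate the canonical form, not the raw bytes the client sent.
    return BLOCKED_HOST.search(canonicalize(url)) is not None


payload = "http://%C4%BEocalhost:3000/admin"
print(BLOCKED_HOST.search(payload) is not None)  # False: the raw check misses it
print(canonicalize(payload))                     # http://localhost:3000/admin
print(is_blocked(payload))                       # True
```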

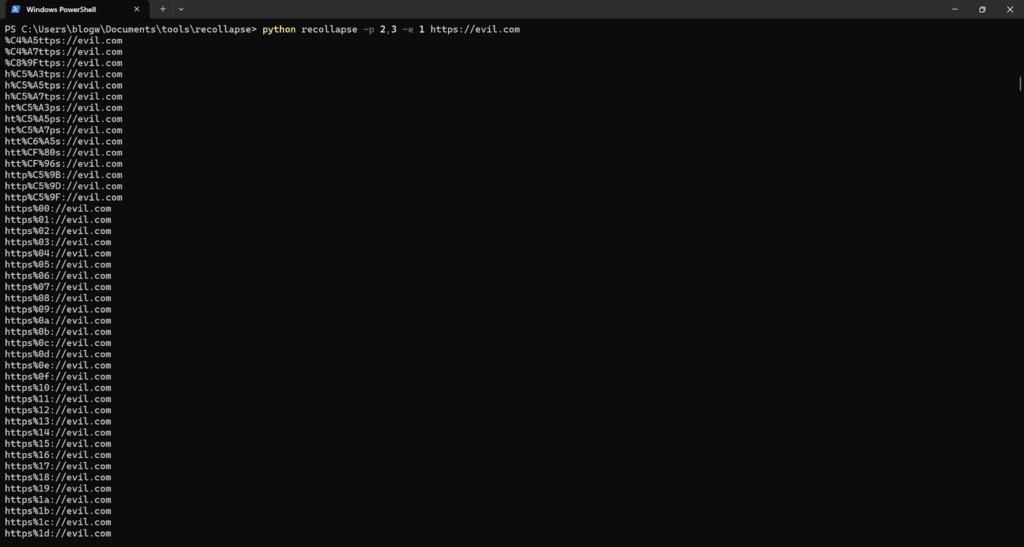

Case Study 2: WAF Bypass

Scenario: A Web Application Firewall (WAF) is configured to block requests targeting https://evil.com.

Exploitation: REcollapse generates payloads targeting specific positions in the string and introduces encoding or normalization tricks.

Approach:

- Generate payloads:

recollapse -p 2,3 -e 1 https://evil.com

- Analyze results with Burp Intruder.

Result: The payload bypasses the WAF because the WAF fails to decode the input and match the normalized string evil.com.

Impact: The attacker circumvents security measures, potentially launching phishing attacks or malicious redirects.

Mitigation:

- Use input sanitization that decodes and validates URLs in all possible formats.

- Combine regex validation with URL parsing libraries for robust validation.

Case Study 3: Open Redirect

Scenario: A regex is used to allow only URLs pointing to examplesite.com and ending with specific extensions like .jpg, .jpeg, or .png.

Vulnerable Regex: ^.*examplesite\.com\/.*(jpg|jpeg|png)$

Exploitation: The permissive .* in the regex allows attackers to craft payloads that bypass the validation.

Bypass: https://attackersite.com?examplesite.com/abc.png

Result: The payload bypasses validation and redirects users to a malicious site while appearing legitimate.

Impact: Victims may unknowingly interact with a malicious site, leading to phishing attacks or malware downloads.

Mitigation:

- Avoid overly permissive patterns like .*.

- Use strict regex validation with specific boundaries:

^https://examplesite\.com/[a-zA-Z0-9_-]+\.(jpg|jpeg|png)$
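Another option is to let a URL parser identify the host and apply the regex only to the path. The Python sketch below is one way this could look. The function name is hypothetical, and rejecting query strings, fragments, ports, and userinfo is an assumption about what this particular endpoint needs.

```python
import re
from urllib.parse import urlsplit

ALLOWED_HOST = "examplesite.com"
IMAGE_PATH = re.compile(r"/[a-zA-Z0-9_-]+\.(jpg|jpeg|png)")


def is_allowed_image_url(url: str) -> bool:
    parts = urlsplit(url)
    return (
        parts.scheme == "https"
        # Comparing the full netloc also rejects userinfo and explicit ports.
        and parts.netloc.lower() == ALLOWED_HOST
        and not parts.query
        and not parts.fragment
        and IMAGE_PATH.fullmatch(parts.path) is not None
    )


if __name__ == "__main__":
    for candidate in (
        "https://examplesite.com/abc.png",
        "https://attackersite.com?examplesite.com/abc.png",
        "https://examplesite.com@attackersite.com/abc.png",
    ):
        print(candidate, is_allowed_image_url(candidate))
```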

Case Study 4: SSRF

Scenario: An application uses regex to block requests to internal IP ranges for mitigating Server-Side Request Forgery (SSRF) attacks.

Vulnerable Regex: ^https?://(127\.|10\.|192\.168\.).*$

Exploitation: The regex fails to account for alternative representations of internal IPs like 0.0.0.0.

Bypass: https://0.0.0.0

Result: The payload bypasses validation and allows the attacker to send requests to internal services.

Impact: The attacker can access sensitive internal services, potentially exposing private data or gaining unauthorized control.

Mitigation:

- Use a comprehensive IP validation library instead of relying solely on regex.

- Include all valid representations of internal IPs in the validation logic.
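As one possible shape for the library-based check, the sketch below uses Python's standard ipaddress module instead of a regex. The function names are hypothetical, and it handles only IP literals in standard notation. Hostnames such as localhost, and alternative numeric forms such as decimal or octal addresses, would still need to be resolved and then checked the same way.

```python
import ipaddress
from urllib.parse import urlsplit


def is_internal_ip(host: str) -> bool:
    """True if host is an IP literal in a range that should not be fetched."""
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        return False  # not an IP literal; the caller must resolve and re-check it
    return (
        addr.is_private
        or addr.is_loopback
        or addr.is_link_local
        or addr.is_unspecified
        or addr.is_reserved
    )


def url_targets_internal_ip(url: str) -> bool:
    host = urlsplit(url).hostname
    return host is not None and is_internal_ip(host)


if __name__ == "__main__":
    for candidate in ("https://0.0.0.0", "http://169.254.169.254/", "https://[::1]:8080/", "https://8.8.8.8/"):
        print(candidate, url_targets_internal_ip(candidate))
```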

Summary of Case Studies

| Case Study | Vulnerability | Exploitation | Impact | Mitigation |
| --- | --- | --- | --- | --- |
| Localhost Restriction Bypass | Insufficient validation of encoded values | Encoded payload %C4%BEocalhost | Unauthorized access to restricted paths | Normalize input and use stricter regex patterns |
| WAF Bypass | Regex fails to handle encoding | Payload https://evil.%e7om bypasses WAF | Circumvention of security measures | Decode and validate inputs in all formats |
| Open Redirect | Overly permissive regex pattern | Payload https://attackersite.com?examplesite.com/abc.png | Phishing or malware attacks | Use strict regex boundaries and URL parsing |
| SSRF Exploitation | Incomplete validation of IPs | Payload https://0.0.0.0 bypasses validation | Access to internal services | Use IP validation libraries or comprehensive regex |

Mitigation Strategies for Regex Vulnerabilities

To ensure regex patterns are secure, efficient, and reliable, a combination of validation practices, debugging tools, and layered security mechanisms must be employed. Below is a detailed exploration of the recommended strategies.

- Strict Validation

Regex patterns should adhere to the principle of least privilege, allowing only the exact input required for the application to function.

- Whitelists Over Blacklists:

- Why? Blacklists try to block harmful inputs but can miss unexpected attack vectors. Whitelists ensure only predefined acceptable inputs are allowed.

- Example: Whitelist for email validation: ^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$

A blacklist might fail to block encoded or obfuscated malicious payloads.

- Limit Input Length: Long inputs can trigger performance issues, especially with patterns prone to catastrophic backtracking.

- Example: For a phone number field, limit to 10 digits: ^\d{10}$

- Enforce Character Constraints: Define acceptable character sets explicitly. Avoid patterns like .* that allow unrestricted input.

- Example: For usernames, allow only alphanumerics and underscores: ^[a-zA-Z0-9_]{3,20}$


- Validated Patterns

Using pre-vetted regex patterns from reliable sources minimizes the risk of introducing vulnerabilities.

- OWASP Validation Regex Repository:

- Provides community-vetted regex patterns for common use cases like email validation, password strength checks, and more.

- Example: Email validation: ^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$

- Advantages:

- Patterns are tested across edge cases.

- Reduces the time and expertise required to create secure regex patterns.


- Regex Debugging

Debugging tools are essential for understanding regex behavior and identifying potential vulnerabilities or inefficiencies.

- Regex101:

- Interactive regex testing platform.

- Features: Explanation of regex behavior, test input matching, and performance metrics.

- RegexBuddy:

- Advanced tool for creating, testing, and optimizing regex patterns.

- Allows developers to view the step-by-step execution of regex matches, making it easier to identify issues like catastrophic backtracking.

- Practical Use: Before deploying regex patterns, test with realistic inputs and edge cases using these tools.


- Defense-in-Depth

Regex validation alone is insufficient for robust security. Combine it with other measures to ensure comprehensive protection.

- Content Security Policies (CSPs):

- Prevent the execution of unauthorized scripts, adding a layer of defense against attacks like XSS.

- Parameterized Queries:

- Use prepared statements for database operations to avoid injection attacks.

- Example Workflow:

- Input is validated with regex.

- Sanitized input is further processed with parameterized queries or stored securely.
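The workflow above can be sketched with Python's built-in sqlite3 module. The table layout, function names, and sample data are assumptions made for the example. The point is that the regex narrows what is accepted, while the placeholder keeps the value out of the SQL text regardless.

```python
import re
import sqlite3

USERNAME = re.compile(r"[a-zA-Z0-9_]{3,20}")


def demo_connection() -> sqlite3.Connection:
    """Create an in-memory database with one hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.execute("INSERT INTO users (username) VALUES (?)", ("alice_01",))
    return conn


def find_user(conn: sqlite3.Connection, username: str):
    # Layer 1: whitelist validation on the whole input.
    if USERNAME.fullmatch(username) is None:
        raise ValueError("Invalid username")
    # Layer 2: parameterized query; the value is never concatenated into SQL.
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchone()


if __name__ == "__main__":
    connection = demo_connection()
    print(find_user(connection, "alice_01"))
    try:
        find_user(connection, "x' OR '1'='1")
    except ValueError as exc:
        print("rejected:", exc)
```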


- Performance Testing

Regex patterns should be tested for computational efficiency to prevent denial-of-service attacks like ReDoS (Regular Expression Denial of Service).

- Profiling Tools:

- Monitor regex execution time under load.

- Identify patterns prone to excessive backtracking.

- Regex101's debugger reports how many steps a match takes, and a fuzzer such as Atheris can surface inputs that make a pattern time out.

- Best Practices:

- Avoid nested quantifiers (e.g., (a+)+).

- Use possessive quantifiers or atomic groups to reduce backtracking: (?>a+)+


Tools for Regex Security

- Regex Debuggers

- Regex101:

- Free, web-based platform for interactive regex testing.

- Features include detailed explanations, regex flags, and real-time input matching.

- Best For: Beginners and intermediate developers needing quick testing and debugging.

- RegexBuddy:

- Comprehensive desktop application for advanced regex analysis.

- Features include performance profiling, optimization tips, and interactive debugging.

- Best For: Professionals working on complex regex patterns.


- Fuzzing Tools

Automated fuzzing tools test regex patterns with random or structured inputs to identify vulnerabilities.

- Atheris:

- Python-based fuzzer using coverage-guided techniques.

- Simulates realistic attack scenarios to uncover regex flaws.

- REcollapse:

- Generates payloads for bypassing regex-based validation.

- Focused on identifying logic flaws and encoding inconsistencies.


- Libraries and Resources

- OWASP Validation Regex Repository:

- A curated collection of secure regex patterns for common use cases.

- Reduces the need for custom regex development.

- RegexMagic:

- Designed for non-developers, this tool generates regex patterns based on user input requirements.


Conclusion

Regex, while powerful, can introduce critical vulnerabilities when poorly designed. To secure applications:

- Understand vulnerabilities like catastrophic backtracking and improper anchoring.

- Use fuzzing tools to uncover bypasses and inefficiencies.

- Follow best practices, including strict validation and vetted patterns.

- Employ defense-in-depth strategies and conduct regular audits to adapt to emerging threats.

By implementing these strategies, developers can leverage regex safely and effectively while minimizing risks.

References

- Regex101: https://regex101.com

- REcollapse GitHub Repository: https://github.com/0xacb/recollapse

- Burp Suite (by PortSwigger): https://portswigger.net/burp

- Atheris (Python-based fuzzer): https://github.com/google/atheris

- Ffuf (Fuzzing Tool): https://github.com/ffuf/ffuf